In most cases the "Crawled - currently not indexed" issue is only a temporary problem. I understand that it can be frustrating to see that your pages are not indexed in Google Search. That's why I've written this blog post to provide you with some tips on how to fix the issue, should it persist.

How to fix the "Crawled - currently not indexed" issue (image credit: aminout.com)

What is the "Crawled - currently not indexed" issue?

The "Crawled - currently not indexed" status in Google Search Console means that Google has visited a page on your website but has not yet decided to include it in its index. This can happen for a number of reasons, which are covered later in this post.

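Do not confuse "Crawled - currently not indexed" with the "Discovered - currently not indexed" status. "Discovered" means that Google knows the URL exists but has not crawled it yet, while "Crawled" means that Google has already fetched the page and has so far left it out of the index. Both statuses appear in the same report, and the checks in this post are worth running for both.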

A screenshot of the "Pages" section in Google Search Console, with the "Discovered - currently not indexed" status.

"Discovered - currently not indexed" is a status in the "Page not indexed" part of Google Search Console. It indicates that Google has found a page on your website but has not yet crawled it, so the page cannot be indexed yet. This can happen for a number of reasons, such as Google postponing the crawl to avoid overloading your site, technical issues with the page, low-quality content, or duplicate content.

If you see the "Discovered - currently not indexed" status for a page that you want to be indexed, there are a few things you can do to fix it:

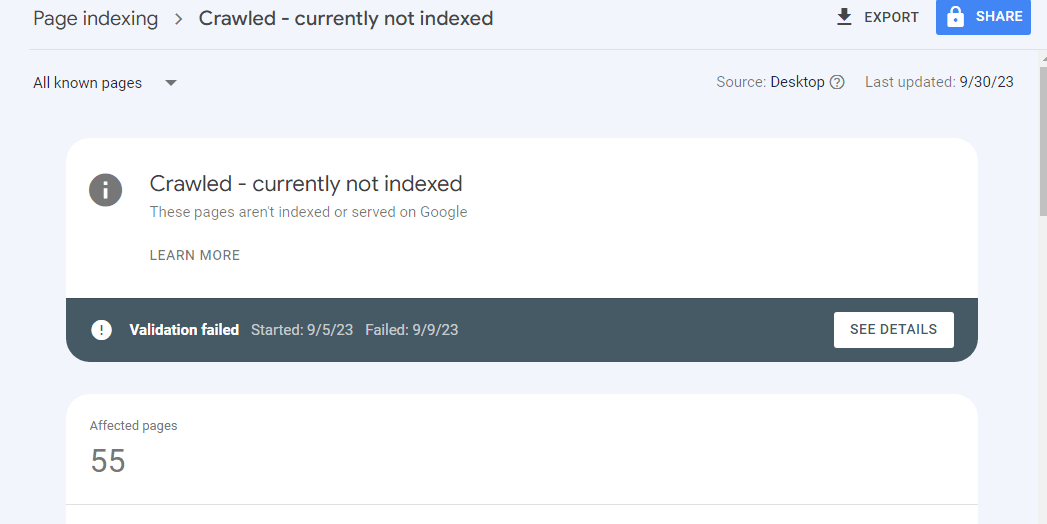

The "Discovered - currently not indexed" section in Google Search Console shows a list of pages that Google has found but has not yet indexed. This is often a temporary problem, but you can follow the instructions below to ensure that it has been resolved.

Instructions:

Go to Google Search Console.

Within the side menu, click on the "Pages" section.

Click on the "Discovered - currently not indexed" section.

Example of a link that is already indexed but still appears in the crawled list

Review the list of pages and identify any pages that you want Google to index.


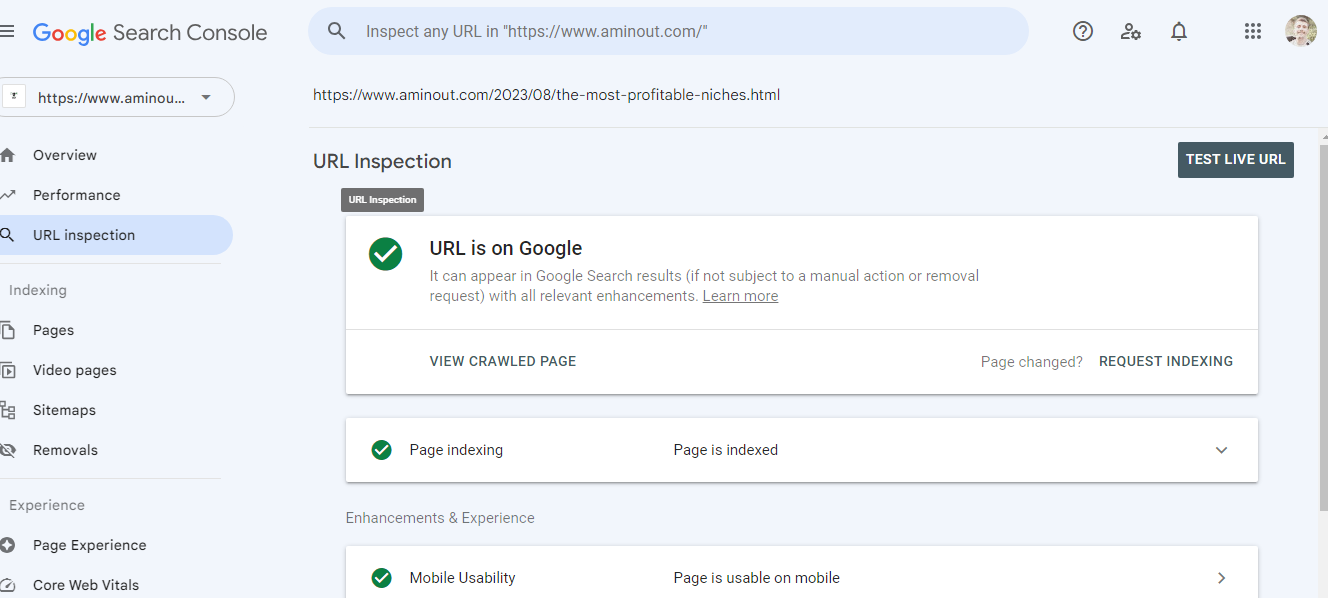

Proof that the link is indexed

In this example, the page is already indexed even though it still shows up in the list, so there is nothing to fix!

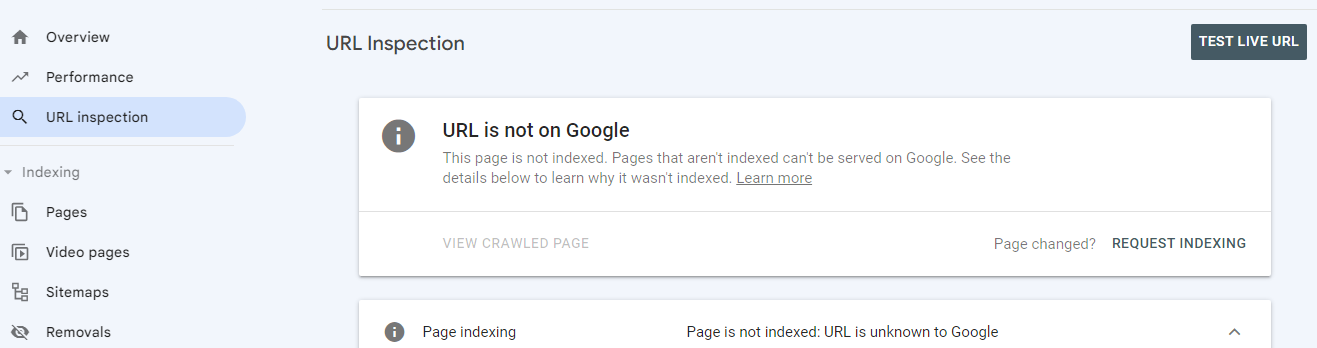
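If you have many URLs to review, the same check can be scripted. The sketch below is a minimal example, assuming you already have the JSON that the Search Console URL Inspection API returns for a page. The field names (`inspectionResult`, `indexStatusResult`, `coverageState`) reflect my understanding of that API and should be verified against the official reference. The function names and the two sample responses are made up for illustration.

```python
# Hypothetical helpers: read the coverage state out of a URL Inspection API
# style response. The field names are assumptions; verify them in the docs.

def coverage_state(response: dict) -> str:
    """Return the coverage state string, or "unknown" if it is missing."""
    result = response.get("inspectionResult", {})
    status = result.get("indexStatusResult", {})
    return status.get("coverageState", "unknown")


def needs_attention(response: dict) -> bool:
    """True when the page is reported as found or crawled but not indexed."""
    return "currently not indexed" in coverage_state(response).lower()


# Illustrative sample responses, not real data.
indexed_page = {
    "inspectionResult": {
        "indexStatusResult": {"coverageState": "Submitted and indexed"}
    }
}
pending_page = {
    "inspectionResult": {
        "indexStatusResult": {"coverageState": "Crawled - currently not indexed"}
    }
}

print(needs_attention(indexed_page))
print(needs_attention(pending_page))
```

A page like the first sample can safely be ignored, even if the report has not caught up yet. Only pages like the second one need the steps that follow.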

OK! Now let's look at the case where the page really does appear as not indexed 👇

Why might a page not be indexed?

There are a number of reasons why a page might not be indexed by Google. Some of the most common reasons include:

- The page is new and Google has not had time to index it yet.

- Technical issues: If a page has technical issues, such as broken links, 404 errors, or robots.txt blocking, Google may not be able to crawl or index the page.

- Low-quality content: Google is less likely to index pages with low-quality content, such as thin content, duplicate content, or content that is not relevant to the page's URL.

- Duplicate content: Google avoids indexing duplicate content, so if your page is a copy of another page on the web, it may not be indexed.

- Google has decided that the page is not relevant to its users and has chosen not to index it.

If you are seeing the "Crawled - currently not indexed" status for a page that you want to be indexed, there are a few things you can do to try to fix it:

1- Check for technical issues. Make sure that the page does not have any technical issues, such as broken links, 404 errors, or robots.txt blocking.

Technical issues are one of the most common reasons why pages are not indexed by Google. These issues can make it difficult for Google to crawl and index your pages, or they can prevent Google from understanding the content of your pages.

Here are some examples of technical issues that can prevent a page from being indexed:

Broken links: Broken links are links that point to pages that no longer exist. When Google encounters a broken link, it cannot follow it to the target, so pages that are only reachable through broken links may never be discovered or crawled.

404 errors: 404 errors occur when a user tries to access a page that does not exist. 404 errors can be caused by a number of things, such as typographical errors in URLs or pages that have been moved or deleted.

Robots.txt blocking: Robots.txt is a file that tells search engine crawlers which URLs on your website they are allowed to crawl. If a page is blocked by robots.txt, Google cannot crawl it and therefore cannot read its content, which usually keeps the page from being indexed properly.
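To see whether a robots.txt rule blocks a given page, you can test the rules locally. This is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the example.com URLs are made up for illustration, and `is_crawl_allowed` is a hypothetical helper name. Python's parser does not reproduce every detail of how Google matches rules (wildcards, for example), so treat the result as a first check only.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check a URL against robots.txt rules supplied as plain text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Illustrative rules: everything under /private/ is blocked for all crawlers.
sample_robots_txt = """\
User-agent: *
Disallow: /private/
"""

print(is_crawl_allowed(sample_robots_txt, "https://example.com/blog/my-post"))
print(is_crawl_allowed(sample_robots_txt, "https://example.com/private/draft"))
```

If a page you want indexed comes back as blocked, look for the `Disallow` rule that matches its path.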

To check for technical issues, you can use a tool like Google Search Console or Ahrefs Site Audit. These tools will scan your website for broken links, 404 errors, and other technical issues.
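If you only need to spot-check a few URLs rather than run a full audit, a short script can do it. The sketch below uses only Python's standard library. `fetch_status` and `describe_status` are names I made up, and the labels are my own grouping rather than anything Google defines. Some servers reject `HEAD` requests, so a real check may need to fall back to `GET`.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for a URL (redirects are followed)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; keep the code.
        return error.code


def describe_status(code: int) -> str:
    """Group a status code into a rough label for triage."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if code in (404, 410):
        return "missing"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "unknown"


# Usage (needs network access, so it is left commented out):
# print(describe_status(fetch_status("https://example.com/some-page")))
```

Pages labelled "missing" or "server error" are the ones to fix first, since Google cannot index a page it cannot fetch.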

Once you have identified any technical issues, you need to fix them. For example, you can fix broken links by replacing them with valid links. You can fix 404 errors by restoring the page or by redirecting the old URL to a valid page. And you can unblock pages in robots.txt by removing the Disallow rule that matches them.

Once you have fixed the technical issues, you can request indexing for the affected pages in Google Search Console.

By fixing technical issues, you can help to ensure that your pages are properly indexed in Google Search.

2- Improve low-quality content. The page may have low-quality content, such as thin content, duplicate content, or content that is not relevant to the page's URL.

Low-quality content is one of the main reasons why Google does not index pages. Google wants to provide users with the best possible search results, so it is far less likely to index pages that it judges to be low quality.

Different types of low-quality content:

- Thin content: Thin content is content that is not very informative or detailed. It may be short, poorly written, or contain duplicate content.

- Duplicate content: Duplicate content is content that appears on multiple pages of a website or on multiple websites. Google usually wants to index only one version of the same content, so it picks one page and may leave the duplicates out of the index.

- Content that is not relevant to the page's URL: The content on a page should be relevant to the page's URL. If the content is not relevant to the URL, Google may not index the page.
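To get a rough feel for thin or duplicated pages on your own site, you can compare their text with a small script. The sketch below is only an illustration: it counts words and measures how many three-word sequences two texts share (Jaccard similarity). The function names are my own, and Google does not publish any word-count or similarity threshold, so use the numbers to rank pages for manual review rather than as a pass/fail test.

```python
import re


def words(text: str) -> list[str]:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word sequences of the given size."""
    tokens = words(text)
    return {tuple(tokens[i:i + size]) for i in range(len(tokens) - size + 1)}


def similarity(text_a: str, text_b: str, size: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    set_a, set_b = shingles(text_a, size), shingles(text_b, size)
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


page_one = "How to fix indexing issues in Google Search Console"
page_two = "How to fix indexing issues in Google Search Console"
page_three = "My favourite recipes for a quick weekday dinner"

print(len(words(page_one)))
print(similarity(page_one, page_two))
print(similarity(page_one, page_three))
```

Pairs of pages with a similarity close to 1.0 are candidates for merging or for a canonical tag, and pages with very few words are worth expanding.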

Tips for avoiding low-quality content:

- Write high-quality content that is informative and engaging.

- Make sure that your content is original and not duplicated from other sources.

- Make sure that the content on each page is relevant to the page's URL.

You can read my article "Tips for Analyzing Keyword Difficulty and Optimizing Your Content" to learn more about how to write high-quality content that is relevant to your target audience.

Here are some additional tips for optimizing your content for search engines:

- Use relevant keywords throughout your content, including in the title, headings, and body text.

- Make sure that your content is well-structured and easy to read.

- Use images and videos to break up your text and make your content more visually appealing.

- Promote your content on social media and other websites.

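After improving a page that you want Google to index, inspect its URL in Google Search Console and click on the "Request indexing" button.

Google will then queue the page for a re-crawl. This process may take a few days or weeks, and a request does not guarantee that the page will be indexed.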

Once the page has been indexed, it will appear in Google Search results.

3- How to fix the "Discovered - currently not indexed" status in the context of Google's Spam Policies

Google's Spam Policies for Web Search are designed to ensure that users have a positive experience when searching the web. These policies prohibit a variety of spammy behaviors, including keyword stuffing, cloaking, and link spam.

If you see the "Discovered - currently not indexed" status for a page that you want to be indexed, it is important to check that the page complies with Google's Spam Policies. If the page violates any of the spam policies, Google may have chosen not to index it.

Here are some specific things to check:

- Make sure that the page is not keyword stuffed. Keyword stuffing is the practice of overloading a page with keywords in an attempt to rank higher in search results.

- Make sure that the page is not cloaked. Cloaking is the practice of presenting different content to users and search engines.

- Make sure that the page is not involved in link spam. Link spam is the practice of building links to your website in ways that violate Google's guidelines.
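For the keyword stuffing check, a quick way to spot an overused term is to measure how much of the text it takes up. This is a minimal sketch for single-word keywords. The function name and the sample sentence are hypothetical, and Google does not publish a density limit, so the number is only a hint that a page may read unnaturally.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in the text that equal the keyword (0.0 to 1.0)."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    if not tokens:
        return 0.0
    return tokens.count(keyword.lower()) / len(tokens)


# Illustrative text: "seo" is 3 of the 6 words.
sample_text = "SEO tips: seo, seo and more"
print(keyword_density(sample_text, "seo"))
```

If one term dominates a page the way it does in this sample, rewrite the passage so that it reads naturally for a human visitor.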

If you find that your page violates any of Google's Spam Policies, you should fix the issue and then request indexing for the page.

Source: Google Search Essentials: https://developers.google.com/search/docs/essentials/spam-policies

Here are some additional tips for fixing the "Crawled - currently not indexed" issue:

- Keep your sitemap up-to-date. This will help Google to discover new pages on your website and index them quickly.

- Use canonical tags. Canonical tags tell Google which version of a page is the preferred version. This can help to prevent duplicate content issues.

- Be patient. It can take some time for Google to index new pages.
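If your blogging platform does not generate a sitemap for you, keeping one up to date can be automated. The sketch below builds a small sitemap with Python's standard library, following the sitemaps.org XML format. The URLs and dates are placeholders, and `build_sitemap` is a name I chose for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages; replace them with your own URLs and dates.
example_pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/my-post", "2024-02-01"),
]
print(build_sitemap(example_pages))
```

Save the output as sitemap.xml at the root of your site and submit it in the "Sitemaps" section of Google Search Console.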

Conclusion:

The "Crawled - currently not indexed" status is a common issue, but it's one that can be fixed with a little effort. By following the tips above, you can help to ensure that your pages are properly indexed in Google Search and that you're getting the most out of your website traffic.

As a blogger, I've learned that the "Crawled - currently not indexed" status is just a temporary setback. By following the tips above, I've been able to fix this issue for my own blog and get my pages indexed in Google Search. I encourage you to do the same, and don't give up on your pages too quickly.